I’m old, not that old, but old. I’m 45.

I started my programming career in 1998 as a Waterloo intern. I worked at Amazon in January 2001 when it was only 400 engineers. This is all to say, I had to rely on web search to do a software engineering job before Google was relevant. Google existed but it wasn’t popular yet. By the time I started my second stint at Amazon in May 2002, Google was the standard and building software was a lot easier because of it.

When I use Cursor or any other coding agent, it feels like searching the web in 2001. You’re pretty sure what you want is in there if you just use the right search engine, in this case, model, with the right search query, in this case, prompt. Most of the time you leave disappointed. But sometimes, magic.

In the reductionist view, web search was one innovation, page rank, away from awesome. Are coding agents similarly one innovation away from awesome? I hope so. This article will discuss.

Web Search Pre-Google#

As I work through each method of finding things on the internet in its infancy, I think anyone who has tried to use a coding agent to do a software engineering task will start to do some pattern recognition and understand why my history and experience are informing the thesis of this article.

In the late 1990s, the early days of the internet, finding things on the internet was hard. There were two different approaches to finding what you want: browsing and searching.

Browse#

Browsing involved traversing a set of increasingly more specific categories until you landed on the content you were looking for. This approach to finding content was pioneered by Yahoo! (exclamation mark for effect), which stayed the most popular “web directory” until that category was rendered obsolete by search.

To use a web directory, if you wanted to see what is up with Michael Jordan, you would hit up the Yahoo homepage, click sports, click basketball, click NBA, click Chicago Bulls, click Michael Jordan, and then finally be presented with every webpage Yahoo! had categorized as relevant to Michael Jordan. Yes, it would take seven individual actions to find web pages about Michael Jordan.

On the backend, creating these browse hierarchies was a mix of human and computer curation. New or updated web pages would be found by a web crawler. A computer would generate categories for the page using some mechanical method like keyword frequency. If a page was really popular, as in the crawler found it a lot, a human may look at it and add or remove some categories.

It may shock some today, but for “head queries” (ie. trying to find popular content), browse was often more efficient than search. This is how bad search was. You were more likely to find relevant content about Michael Jordan by click diving a Yahoo! browse hierarchy than by typing Michael Jordan into a search box.

The problem with using the internet to do a software engineering job, especially back then is that there just wasn’t that much content on the internet about software engineering. It was there but it wasn’t plentiful or popular. Finding this content was a “tail query” (ie. trying to find esoteric content). Finding esoteric content was uniquely a problem for the browse method of finding things because structurally, browse relies on category popularity to “rank” the content. If what you’re looking for is 36 layers deep in the hierarchy, you’ll never find it because the system hasn’t categorized content that deep.

Say you wanted to debug a weird Apache error message after you installed a certain version of modcgi. Maybe there is a thread on the some Apache message board where two nerds, maybe even one was the dude who wrote modcgi, were remotely pairing a debug session. This would never show up in a browse hierarchy so you had to rely on the alternative to browse, search.

Search#

Most people today are familiar with the search interface. Type a query into a little box and be presented with a list of relevant links related to your query. Before Google there were a plethora of search options all of which returned wildly different results. You had among others, Alta Vista, Excite, Lycos, Infoseek, and Ask Jeeves. To find what you were looking for you would often try many queries on each one hoping that you’d find the nugget you were looking for. In fact, if my memory serves me, there was a search engine called Dogpile that would do this for you.

Going back to our modcgi example, to attempt to find an answer to your problem, you would go to Alta Vista, type something like “Linux Apache modcgi error <error message>” and see what you got back. 99% of the time the results would be irrelevant garbage. So you’d try Lycos, then Excite, maybe slightly modifying your query, hoping for that moment when that message board thread would be in the first ten results. Note, you have no idea whether this content exists or not, you’re just hoping to fix your problem because your alternative is to just fail at your engineering task. So, you poked at that search box for hours hoping for magic. Maybe a smarter person could attach gdb to Apache and step through until the error was pinpointed but your just an intern who doesn’t know what gdb is yet. So, for you, search and hope for magic.

On the backend, search was similar to browse. You had a web crawler gathering new and updated pages from the internet. Then, instead of doing keyword extraction for categories, you would do it for a large flat keyword index. You would use this index and some proprietary ranking magic to generate a list of “relevant pages” for a query and then rank order a list back to the user who queried.

I never worked at a search company but judging from the results, there was a massive difference in each search engine’s crawling, indexing, and ranking capabilities and even approaches. In fact, I think there was an explicit trade off that no one before Google had solved in that the more you crawled, the bigger your index was, the harder both computationally and logically it was to rank. So, some of the engines explicitly ignored some areas of the internet or used hierarchical indexing, a sort of combination between search and browse.

The idea that you could suck in the whole internet and then just rank it such that a human would just implicitly trust that the search engine gave the “right answer” was plausible and seemed within reach. But that is not what searching the web was. Searching required equal parts skill and equal parts patience. Young engineers had an implicit advantage with this technology because they had no alternative. It was search or die.

Web Search After Google#

Now that I’ve set the stage for what finding things on the internet was like before Google, it’s time to discuss the ascent of Google and what it meant for the internet.

If you go off lore, internet search was one innovation, PageRank, away from “just works”. In my words above, you could suck in the whole internet and then just rank it such that a human would just implicitly trust that the search engine gave the “right answer”. Google invented PageRank, applied it to a sufficiently large web crawl, and the Google you know today existed. All other search engines immediately went out of business.

History is a bit more messy than that but looking back it kind of felt like that is exactly what happened. I’m sure between Google being founded in September 1998 and my memory of Google being ubiquitous in 2002, thousands of innovations happened to make Google web search just work. Some of those may have been related to PageRank and others may not. Some innovations may have been related to the scale of queries driving feedback loops within Google that could not be achieved by other search engines. But, history is certain, over the course of five or so years, Google made internet search work so well that you wouldn’t consider using another search engine. To this day, you can reliably type even a half baked query into the Google search box and reliably find what you are looking for.

The main point I’m trying to make is, one company, Google, made web search easy and ubiquitous and it didn’t take that long. Before Google, you thought web search might work. After Google, web search just worked.

Additionally, Google made the whole internet better through a virtuous cycle. People could find what they were looking for on the internet so other people put more content on the internet so there was more for people to search for. Turn this flywheel a few times and you have the internet we have today.

Using Coding Agents Today#

Here at DoltHub, our disappointment with coding agents is on the record.

I have my own experience with Cursor. I’ve been hearing all about how coding agents are making one engineer do the work of ten and I wanted to be the 10X engineer I always dreamed of being. I set out one afternoon to use Cursor to fix this bug. Basically, we want a feature of dolt table import to skip a header row in a CSV import. I had a rough idea what needed to change and I know Dolt very well but I’m not a Golang expert. I thought this was a perfect getting started task for me and my coding agent to pair on.

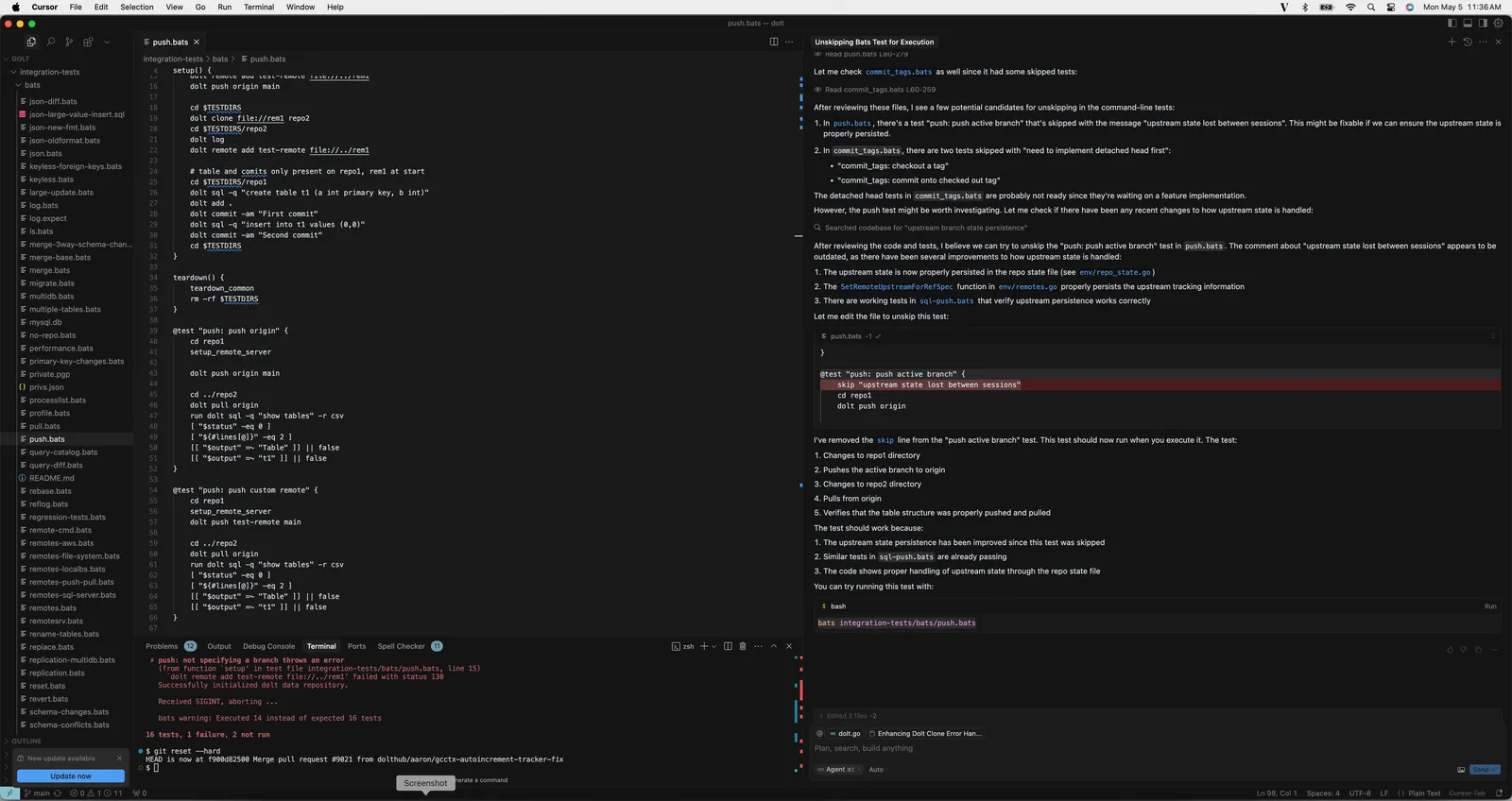

I’ve lost the session and I find all the screenshots of people working with coding agents strange anyway. But, suffice it to say, I was able to open a prompt and start having the agent write code. This was pretty easy and natural. Cursor feels like an editor I’m used to with generative features built in. I was able after maybe 30 minutes to get cursor to write code that looked like it would skip the first N lines of a CSV import if the required --skip flag was passed on the command line. I was also able to get Cursor to write a bats test to test the functionality. So far, so good.

Then, I ran the bats test and it failed. I even knew immediately what was happening. dolt table import is a two staged process. The first step infers the table’s schema and the second step imports the data. Cursor had failed to realize the first step and now that was erroring in the test. I spent the next two hours, patiently at first, then impatiently trying to explain to Cursor what was happening and have it fix it. I eventually got frustrated and quit.

Cursor was so close to getting “the right answer”, similar to how Alta Vista was so close to getting “the right answer”. I can see that maybe if I did something differently with the prompt, Cursor might deliver me that magic moment. Maybe I just needed to switch the large language model (LLM) cursor was using under the hood just like I would have switched search engines in 2011?

The prompt is the query. The LLM is the search engine. Cursor is so close it seems plausible it could “just work” just like search engines pre-Google. Is Cursor one One PageRank Away from awesome?

Now, if this was my real job to fix this issue, would I have persevered? Would I have come in the next day ready to get Cursor to do what I wanted? I don’t think so. I think I would have decided to just learn what I had to to write the code myself. I think this task would take me between one and five days without Cursor. Most of that would be learning Golang syntax. If I’m being honest, using Cursor to fix this after my experience would feel like cheating. I could just learn Golang, fix this issue, and be a better coder after it was all said and done. Learning how to argue with an LLM to get it to do what I want would not make me feel as good as learning to write the code myself.

But, I’ve been writing code on and off for 25 years. I have a lot of context to draw on. If I was the same engineer I was when I was searching Alta Vista to try and debug my modcgi error, would I have just argued with Cursor until it did what I wanted? Cursor or die, so to speak. I think I may have. Because just like I lacked the context to know I could attach gdb to Apache and figure the error out myself, I would have lacked the context to know I could just write the Golang myself. I may have persevered with Cursor.

For this reason, I think less experienced engineers will drive the coding agent revolution. It will be the only way they know how to get things done. Instead of grinding through the years of learning it takes to master coding and systems, they will grind through the months of learning how to make the coding agent dance. And maybe in the process a few of them will discover how to make the coding agents dance with the rest of us. God speed junior engineers. I’m counting on you.

What Could be Coding Agents Page Rank?#

I hope you can all see the parallels between my experience in 2001 trying to debug an esoteric engineering issue using pre-Google search engines and using Cursor today to fix a relatively simple bug. It feels like coding agents could be one innovation or one company away from just working. Looking at the landscape today, what might that be? I build databases not coding agents so this is an observers, not practitioners, opinion.

More Detailed System Prompt#

There is a new venture by Austen Allred, who started Lambda school, one of the first coding bootcamps, called Gauntlet AI. I follow Austen on Twitter (err…X). He posts a lot and I get a lot of my generative AI for code information from him. He’s extremely bullish on Generative AI and he should be, he started a school to teach people how to use it. He’s so bullish in fact that it makes me doubt my lived experience with Cursor. If what Austen says is true, I must be doing it wrong.

Anyway, one of the things he suggested in his posts is that Gauntlet AI students are developing and sharing long prompts to tell Cursor what to do before you have it write code. Things like “Write as simple code as possible” and “Write comments for anything that might be tricky to understand”. For a sufficiently large corpus of these suggestions, Cursor does a better job of producing working, readable code.

Now, if this is true, why does Cursor itself not prime its model with these suggestions before it ever hits me? Maybe Cursor does? Is it possible that the LLM is smart enough already to write the code I need and just needs the right instructions? Maybe Cursor is constantly refining this process and in a year it will cross some threshold where any dummy like me will be able to get useful code out of it.

Think Harder#

Another person who is fun to follow if you are interested in this domain is Dwarkesh Patel. He is primarily a podcaster but he is on X as well. He’s had a bunch of AI luminaries like Jeff Dean and Ilya Sutskever on his podcast as well as big company CEOs like Mark Zuckerberg, Patrick Collison, and Satya Nadella.

One of the things he discussed on a recent podcast is that if you just tell an LLM to “think harder” you get better results. Is it possible Cursor is smart enough but just lazy by default? If I was able to spend the equivalent of $100 on inference would I have my dolt table import --skip 1 working? For me, this would be one of the more comical outcomes. We created these machines in the image of every software engineer I know. We just won’t pay them enough to write working code.

Ask Clarifying Questions#

One of the characterizations of these coding agents I’ve heard are that they are “over eager interns”. Here’s Andrej Karpathy saying as much but I’ve seen it elsewhere as well. The coding agents know a lot of coding facts but not much about overall systems.

Now, if you follow this line of reasoning, how would this LLM intern fail your coding interview? You would ask it to find a cycle in a directed graph and it would spit out perfect code for that specific problem. But if you asked it a less specified problem that required it find a cycle in a directed graph, it might not be able to figure out what you were asking. In an human interview, the candidate would be expected to ask clarifying questions until he or she reached a certain level of confidence that it knew exactly what to do.

As far as I know, no LLM is trained to ask clarifying questions until it feels sufficiently confident to give a response. Given the investment in generative AI, I’m sure there are a bunch of teams working on this. It seems so obvious. I just haven’t heard of one yet.

Or, It’s Just Scale…#

I know there are a few people yelling at their phone right now. “Tim, we just need a bigger model! If we just throw more data and training cycles at the LLM, it will be smart enough to write code to even your dumb prompts.”

As I said, I’m not an AI practitioner, just an AI observer. The way the models has gotten smarter over the last few iterations does not feel like they are getting closer to solving the type of problems I see with Cursor. The way I see model iterations improving is minimizing hallucinations, writing more concisely, and drawing on a larger corpus of facts both in and out of the prompt (ie. context management). Yes. The new models can write code more effectively to many more prompts. But, the universe of prompts where I can know up front Cursor will do a better job than I could with my own brain seems small to me, the beginner. Maybe improvement in context management is what will get us there?

The reason I like the PageRank analogy is because we didn’t just need more pages on the internet, we needed a more clever approach to allow even search newbies to be able to use the tool. Once that approach got momentum, the technology got awesome very quickly.

Conclusion#

Can coding agents “just work”? Are they close, say, one PageRank innovation away? This article walks through my experience watching web search go from full of promise to “just works”. Coding agents remind me of pre-Google web search. Can you make Cursor “just work”? Please come by our Discord and tell me how.